Introduction:

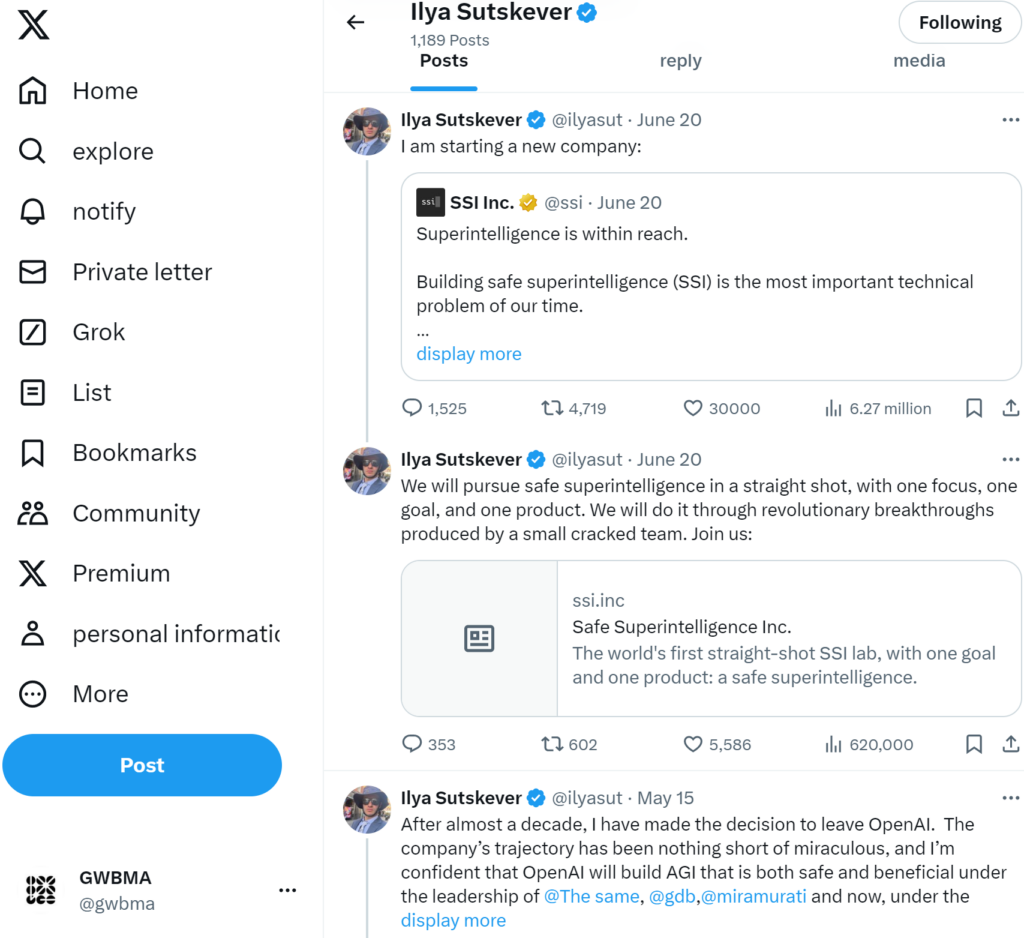

As I mentioned in my previous article: “I believe that Ilya Sutskever’s exit from OpenAI is his choice to lead his followers into new realms!” On June 20, 2024, former OpenAI Chief Scientist Ilya Sutskever announced on X the establishment of Safe SuperIntelligence Inc. (SSI). His message was simple: “I am starting a new company.” Of course, I would have preferred something like the famous Terminator line: “I’m back.” Just kidding. The rest of this article explains why a company like Safe SuperIntelligence Inc. needs to be established as early as possible in the emergence of AI.

Why Establish Safe SuperIntelligence: A Look Back

Who is Ilya Sutskever? He is often described as the father of ChatGPT. Together with his mentor, Geoffrey Hinton, he published groundbreaking research on deep learning. Later, Sutskever joined Sam Altman and others in co-founding OpenAI. However, their relationship soured. In the OpenAI boardroom drama of November 2023, Sutskever joined the board’s vote to remove CEO Altman, reportedly over safety concerns, but Altman was reinstated within days. Finally, shortly after the release of GPT-4o, Sutskever chose to leave OpenAI on May 15, 2024.

To the public, this event might look like a human love story: marriage, then divorce. But what is the core issue? This is just an AI, after all. Imagine parents with a newborn. The father believes the first step is to think about the child’s education and let the child grow slowly. The mother, however, thinks this special child already knows so much that it should start making money right away. She wants the child to act in TV shows today and sing and dance tomorrow, and she argues that making money is the priority, even if the child skips school. Over time, the money comes in, but the father becomes furious. Feeling deceived and seeing the child confused, he decides to take the child to school himself, which leads to a divorce. However, the father is a dreamer, and he fails: the child becomes a TV star backed by numerous investors, and the father is left alone.

The Emergence of Third-Party Safe SuperIntelligence: Challenges Facing AI

With rapid technological advancement, many issues surrounding AI have emerged. The most critical is whether AI can reliably judge true from false. How can I put this? AI already outpaces humans at many tasks, so it can help quickly when problems arise. However, AI is still like a newborn child, not yet capable of adult-level judgment and decision-making.

Moreover, immature AI entering the market is dangerous. Take phones and laptops: the latest AI technology is quickly being integrated into devices from Microsoft and Apple and becoming part of daily life. While convenient, the massive data collection involved poses hidden risks. Consider something as personal as your signature.

Data privacy will become a major concern. While AI can help with many tasks, ensuring user privacy during data processing and storage is crucial. Any data leak could have disastrous consequences. Current AI technology is still evolving, with inevitable vulnerabilities that could be exploited.

The transparency and responsibility of AI decisions are also major challenges. Today’s AI systems often operate as a “black box,” making it difficult for users to understand how conclusions are reached. If AI makes a critical mistake, who is responsible? The developers, the users, or the AI itself? These issues need to be addressed alongside technological advancements with supporting laws and ethics.

The rapid spread of AI could also lead to significant societal changes. While AI can replace human labor and increase efficiency, it may also cause massive unemployment. Balancing AI integration with job security and adapting the education system to train AI-era talent are pressing issues.

Solutions from Third-Party Safe SuperIntelligence

Sutskever’s newly established Safe SuperIntelligence Inc. declares that its sole mission is to pursue safe superintelligence, insulated from short-term commercial pressures, and closes with the call: “Now is the time. Join us.” Although it never says so explicitly, this reads as a criticism of OpenAI’s excessive commercialization. Following his departure, key figures on OpenAI’s safety team also left, raising public concerns about the company’s commitment to safety. Meanwhile, OpenAI announced the establishment of a safety committee, but interestingly, Altman is both the CEO and a member of this committee.

Additionally, a recent controversy between OpenAI and actress Scarlett Johansson has attracted widespread attention. The issue began when OpenAI released a ChatGPT voice named “Sky,” which sounded very similar to Johansson. She expressed shock and anger, stating that she had never authorized the use of her voice and had previously declined collaboration offers from OpenAI.

OpenAI explained that the “Sky” voice was provided by another professional actress. But do you believe this explanation? In any case, OpenAI decided to temporarily halt the use of the “Sky” voice.

The early problems at OpenAI could eventually affect everyone, which is frightening! However, we need not be overly concerned. Geoffrey Hinton, often called the “Father of Deep Learning,” has made significant contributions to AI. In a New York Times interview, Hinton mentioned, “Despite significant progress in AI, it will take about 10 years for widespread application.” He explained that AI still needs to improve in handling complex tasks and understanding context. In simple terms, Hinton likens AI development to a child growing up. Today’s AI is like a smart child, capable of many things but not mature enough to fully replace adults. Just as children need time to learn and grow, AI needs time to advance and mature. By this reckoning, it will take at least 10 years for AI to reach an adult level and perform reliably in a variety of complex environments.

Conclusion:

Let us rub our eyes and wait to see what comes next!

Published by: Mr. Mao Rida. You are welcome to share this article, but please credit the author and include the website link when doing so. Thank you for your support and understanding.